Imagine navigating real-world environments virtually with the same level of precision, detail, and interactivity experienced in person. It may sound futuristic, but this is what Spatial Computing technologies offer: they leverage advanced algorithms to create 3D models of real-world environments, enabling users to interact with data in a more immersive way. And, despite the sci-fi feel, the potential applications are very practical, especially in industries like oil and gas, mining, refineries, and other large-process operations. Still, how can real-world scenarios be precisely recreated in a virtual setting?

In most cases, a reality-based 3D model is not a 100% accurate replica of the environment when it is first built, so the space has to be reconstructed in depth from inputs such as measurements, historical data, sensors, and imaging. For this purpose, Neural Radiance Fields (NeRFs) and Gaussian Splatting techniques estimate the relevant spatial information identified as missing within the 3D model to help compose a complete perspective of the environment. And, while both serve to reconstruct a three-dimensional scene from sparse two-dimensional images, they differ in their approaches. So, what sets them apart?

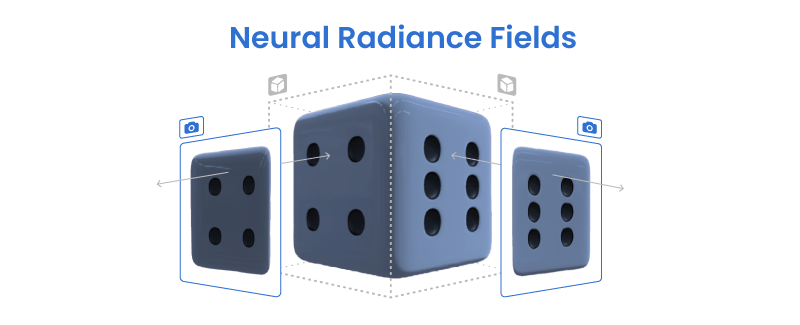

Neural Radiance Fields (NeRFs)

First, let’s talk about NeRFs, or Neural Radiance Fields. This algorithmic model’s strength lies in its ability to create novel views of an environment from 2D images. Essentially, it takes a set of images and fills in the gaps by estimating what the scene would look like from different perspectives that weren’t originally captured.

With just a few snapshots of an environment, NeRF can recreate a 3D model that provides viewing angles and details not visible in the original images. This is achieved through deep neural networks, which estimate how light would behave in unseen parts of the scene, generating realistic views from new perspectives.

The article “NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis” first presented NeRFs as a method for synthesizing novel views of complex scenes by optimizing a continuous volumetric scene function using a sparse set of input views. In this context, the scene function describes the color and volume density at every point in the scene and for every viewing direction, and the “novel” views are rendered from it. Thus, the previously “sparse” set of views becomes a “dense” scene representing the environment virtually with high fidelity.
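To make the idea of a volumetric scene function more concrete, here is a minimal sketch of how color and density samples along a single camera ray can be blended into one pixel color, using the standard volume rendering quadrature. The `composite_ray` name and the hand-written sample values are assumptions for illustration only; in a real NeRF, the densities and colors would be predicted by the trained network rather than typed in.

```python
import numpy as np

def composite_ray(densities, colors, deltas):
    """Blend samples along one camera ray into a single RGB color.

    densities: (N,) volume density at each sample, ordered near to far
    colors:    (N, 3) RGB color at each sample
    deltas:    (N,) length of the ray segment covered by each sample
    """
    densities = np.asarray(densities, dtype=float)
    colors = np.asarray(colors, dtype=float)
    deltas = np.asarray(deltas, dtype=float)
    # Opacity contributed by each segment of the ray.
    alphas = 1.0 - np.exp(-densities * deltas)
    # Transmittance: fraction of light that reaches each sample unblocked.
    transmittance = np.cumprod(np.concatenate(([1.0], 1.0 - alphas[:-1])))
    weights = transmittance * alphas
    return weights @ colors, weights

# Hypothetical samples: empty space, a faint red haze, then a dense green surface.
rgb, weights = composite_ray(
    densities=[0.0, 0.5, 50.0],
    colors=[[0, 0, 0], [1, 0, 0], [0, 1, 0]],
    deltas=[0.1, 0.1, 0.1],
)
print(rgb, weights)
```

Samples behind a dense region receive almost no weight, which is how the representation encodes occlusion without an explicit surface mesh.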

According to a Tsinghua University study, Neural Radiance Fields (NeRF) have achieved unprecedented view synthesis quality using coordinate-based neural scene representations. However, NeRF’s view dependency can only handle simple reflections such as highlights and cannot deal with complex reflections such as those from glass and mirrors. In those cases, NeRF models the reflected virtual image as real geometry, leading to inaccurate depth estimation and producing blurry renderings when multi-view consistency is violated.

Gaussian Splatting

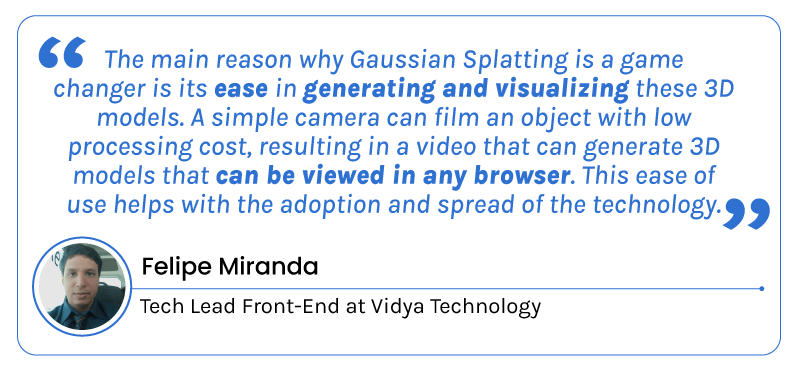

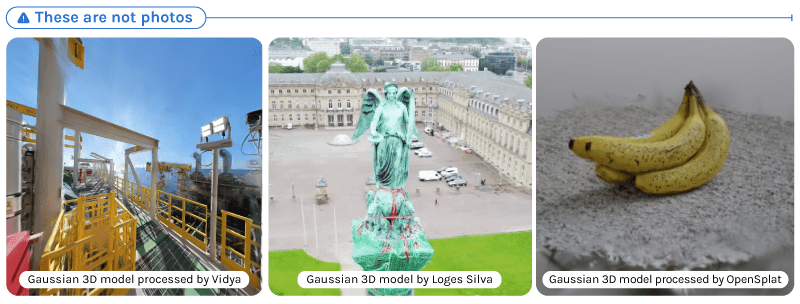

On the other hand, Gaussian Splatting serves a similar purpose to NeRF but works differently. While NeRF estimates new views of a scene, Gaussian Splatting focuses on real-time rendering by storing 3D information as Gaussian points, that is, spatial coordinates representing volumetric information about the environment.

The article “3D Gaussian Splatting for Large-scale Surface Reconstruction from Aerial Images” stated that, in contrast to NeRF-based methods, Gaussian Splatting uses 3D Gaussian primitives. Thus, by inputting coordinates (like the 3D position and viewing direction) into these primitives, the system learns to associate these coordinates with the appropriate color and opacity values. And the model is trained by adjusting the positions, rotations, and scales of these points until the scene is reproduced accurately.

A Gaussian primitive is essentially a mathematical representation of a point in space, characterized by its position, size, color, and opacity. These primitives are modeled as 3D Gaussian points, which allows them to occupy a volume in space rather than just a single point.
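As a rough illustration of what such a primitive could look like in code, the sketch below stores the attributes mentioned above and builds the covariance matrix that gives the Gaussian its volume, using the common construction of a rotation applied to a per-axis scale. The class name, field names, and the use of a single RGB color are assumptions made for this example; practical implementations typically store richer, view-dependent color.

```python
from dataclasses import dataclass
import numpy as np

@dataclass
class GaussianPrimitive:
    position: np.ndarray  # (3,) center of the Gaussian
    scale: np.ndarray     # (3,) standard deviation along each local axis
    rotation: np.ndarray  # (4,) quaternion (w, x, y, z)
    color: np.ndarray     # (3,) RGB, a simplification for this sketch
    opacity: float        # 0 = fully transparent, 1 = fully opaque

    def rotation_matrix(self):
        # Normalize so the quaternion always encodes a pure rotation.
        w, x, y, z = self.rotation / np.linalg.norm(self.rotation)
        return np.array([
            [1 - 2 * (y * y + z * z), 2 * (x * y - w * z), 2 * (x * z + w * y)],
            [2 * (x * y + w * z), 1 - 2 * (x * x + z * z), 2 * (y * z - w * x)],
            [2 * (x * z - w * y), 2 * (y * z + w * x), 1 - 2 * (x * x + y * y)],
        ])

    def covariance(self):
        # Sigma = R S S^T R^T: an axis-aligned ellipsoid rotated into place.
        R = self.rotation_matrix()
        S = np.diag(self.scale)
        return R @ S @ S.T @ R.T

    def density_at(self, point):
        # Unnormalized Gaussian falloff: 1 at the center, smaller farther away.
        d = np.asarray(point, dtype=float) - self.position
        return float(np.exp(-0.5 * d @ np.linalg.inv(self.covariance()) @ d))

blob = GaussianPrimitive(
    position=np.zeros(3),
    scale=np.array([1.0, 2.0, 3.0]),
    rotation=np.array([1.0, 0.0, 0.0, 0.0]),  # identity rotation
    color=np.array([1.0, 0.0, 0.0]),
    opacity=0.8,
)
print(blob.covariance())
```

Keeping scale and rotation as separate parameters, rather than storing the covariance directly, means any values an optimizer produces still yield a valid ellipsoid.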

In this context, optimizing these primitives ties their parameters to the observed data, ensuring that the scene reconstruction is accurate. This optimization process contributes to a more faithful representation of the 3D scene when viewed from various angles. Then, these Gaussian points are “splatted” onto a 2D plane based on the camera’s position and rasterized to generate per-pixel colors, creating a fast and responsive image. This method uses GPU acceleration to render scenes in real time. And, thanks to high-speed rasterization on GPUs, it can render faster than NeRFs.
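The splat-and-rasterize step can be sketched for a single pixel as follows. This is a deliberately simplified CPU illustration, not a production rasterizer: it assumes isotropic Gaussians already expressed in camera space (z pointing forward), a pinhole camera with a hypothetical `focal` length, pixel coordinates measured from the image center, and circular footprints that simply shrink with depth. Real implementations project the full 3D covariance and run in parallel on the GPU.

```python
import numpy as np

def render_pixel(pixel, centers, sigmas, colors, opacities, focal=100.0):
    """Color of one pixel from isotropic Gaussians given in camera space."""
    centers = np.asarray(centers, dtype=float)
    colors = np.asarray(colors, dtype=float)
    pixel = np.asarray(pixel, dtype=float)
    order = np.argsort(centers[:, 2])  # nearest Gaussian first
    color = np.zeros(3)
    transmittance = 1.0  # fraction of light not yet absorbed
    for i in order:
        x, y, z = centers[i]
        if z <= 0:
            continue  # behind the camera
        # "Splat": pinhole projection of the center onto the image plane.
        uv = focal * np.array([x, y]) / z
        # Simplification: circular footprint whose radius shrinks with depth.
        radius = focal * sigmas[i] / z
        dist_sq = np.sum((pixel - uv) ** 2)
        alpha = opacities[i] * np.exp(-0.5 * dist_sq / radius**2)
        # Front-to-back alpha blending.
        color += transmittance * alpha * colors[i]
        transmittance *= 1.0 - alpha
    return color

# Hypothetical scene: a half-transparent red Gaussian in front of an opaque blue one.
pixel_color = render_pixel(
    pixel=(0.0, 0.0),
    centers=[[0.0, 0.0, 2.0], [0.0, 0.0, 1.0]],
    sigmas=[0.1, 0.1],
    colors=[[0.0, 0.0, 1.0], [1.0, 0.0, 0.0]],
    opacities=[1.0, 0.5],
)
print(pixel_color)
```

Because each pixel only needs a sorted list of overlapping footprints and a running blend, the work maps naturally onto GPU rasterization, which is where the speed advantage comes from.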

Gaussian Splatting stands out in 3D scene reconstruction by ensuring that the scene can be rendered with high fidelity and responsiveness. And, although both techniques have the same intended use, Gaussian Splatting is often seen as a more practical space-reconstruction tool than NeRF due to its fast rendering and modest input requirements. This combination of speed and accuracy makes this technique a powerful tool for applications ranging from virtual reality to industrial simulations, ultimately enhancing the user experience in navigating and interacting with 3D environments.

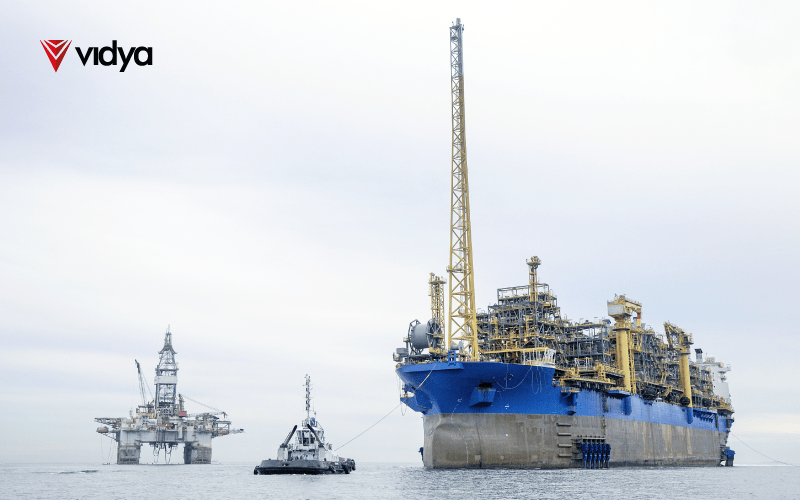

Industrial applications

So, how do these technologies impact large-scale industries? Both NeRF and Gaussian Splatting provide fluid 3D representations of complex environments. Indeed, according to machine learning scientist Conrad P. Koziol, Ph.D., building these 3D replicas helped shift the conversation to more intuitive ways of understanding the work being done in an industrial context (rather than through a report or set of slides), creating a sense of ‘being there’.

Furthermore, Spatial Computing techniques make it possible to use space as an index to information. With a 3D representation, the index is located in the environment itself. To retrieve critical information about a particular component in the scene, the user navigates to it spatially, and the closest images are brought up. This capability accelerates problem-solving in industrial scenarios, providing immediate, data-driven insights in an immersive format.
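A minimal sketch of this “space as an index” idea: given the positions from which images were captured, a query location in the scene returns the nearest images. The function name, file names, and coordinates below are hypothetical, and a brute-force distance search is used for clarity; a larger deployment would likely rely on a spatial data structure instead.

```python
import numpy as np

def closest_images(query_point, camera_positions, image_names, k=3):
    """Return the k images captured nearest to a 3D query point."""
    positions = np.asarray(camera_positions, dtype=float)
    query = np.asarray(query_point, dtype=float)
    # Euclidean distance from the query to every capture position.
    distances = np.linalg.norm(positions - query, axis=1)
    nearest = np.argsort(distances)[:k]
    return [(image_names[i], float(distances[i])) for i in nearest]

# Hypothetical capture log: where each inspection photo was taken (meters).
names = ["pump_a.jpg", "valve_7.jpg", "pump_b.jpg"]
positions = [[0.0, 0.0, 0.0], [10.0, 0.0, 0.0], [1.0, 0.0, 0.0]]

# The user navigates to a component near x = 0.9 and asks for nearby images.
hits = closest_images([0.9, 0.0, 0.0], positions, names, k=2)
print(hits)
```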

Beyond that, because safety is a top priority for any large-process industry, the ability to accurately map and monitor high-risk environments in real time is fundamental for these operations. In this sense, these technologies allow continuous and up-to-date monitoring of critical areas, helping to prevent accidents before they happen.

These tools allow users to achieve precise visualization, fluid navigation, and seamless interaction between physical and digital elements, blurring the boundaries between reality and virtuality. And, as industries continue to adopt these tools, we’re likely to see fewer accidents, faster response times, and overall better decision-making when it comes to maintaining and managing complex environments. Essentially, the future of industrial innovation lies at the intersection of these digital and physical realms, where precision, safety, and efficiency are not just enhanced but redefined.