In the past two years, Generative AI has changed many aspects of modern life. What once seemed impossible is now part of people’s daily routines worldwide. Chatbots like ChatGPT, Gemini, and Copilot enhance people’s ability to perform tasks, automating many of them and reducing the time that complex activities require. One of the most impressive capabilities of these chatbots is their ability to hold human-like conversations. That means computers mimic how humans talk, interpreting the meaning of words and sentences, and, based on the knowledge they have acquired, provide an answer that fits the context of the conversation. Central to this approach is NLP (Natural Language Processing), which applies machine learning and deep learning models to the science of natural languages, specifically linguistics.

The relation between Natural Languages and NLP

Humans talk, and that may be one of the traits that most distinguishes us from other species. Humans have a complex system of communication. Within that system, there are many different languages, each with its own complex structure. Understanding how humans communicate is a science in itself. Now imagine reproducing that in computational models. That’s what NLP is all about. According to IBM, natural language processing (NLP) is a subfield of computer science and artificial intelligence (AI) that uses machine learning to enable computers to understand and communicate with human language. Revolutionary as it is, mimicking human language is far from simple. To better understand how NLP works, and how remarkable it is, we must first understand how human language works.

The scientific study of natural language is the field of linguistics. It faces the challenge of mapping and understanding the many aspects of human communication. Linguistics therefore applies to many levels of communication, from the sounds we produce to how we write words and sentences, what all of that means, and beyond. All of these levels should be considered in NLP development. For example, today there are models capable of understanding written text as well as models capable of recognizing speech. Both fall under the umbrella of linguistics, but in different areas.

Human communication starts with the sounds humans can produce. A baby’s first noises are the beginning of a journey toward acquiring language. The sounds we produce carry an implied meaning: we attach ideas to sounds, and eventually to words. Words then combine into sentences whose meanings are different from, and more complex than, those of the isolated words.

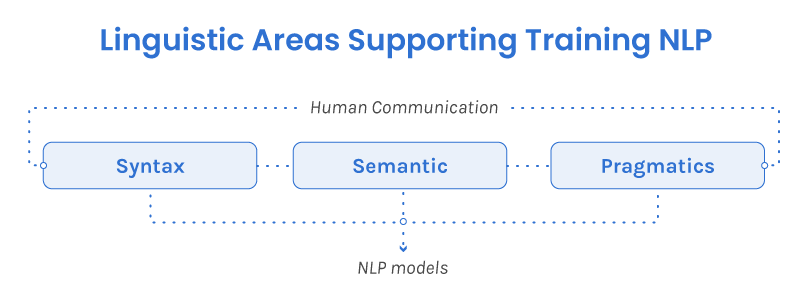

The logic behind training NLP models is similar. The first step is to make computers understand the meaning of sounds and words, and how combining them influences the idea being expressed. For that, the areas of linguistics most relevant to training models are semantics and syntax.

Syntax categorizes words and organizes them into a sentence structure. Grammars are built from syntax, and it is syntax that governs the position of words in a sentence and the relations between them. Translated to NLP development, syntax governs how a computer should compose an answer to a question, given that a finite set of words can be combined into a practically unlimited number of sentences.

In this sense, the model can identify correct word positioning within a sentence and autonomously choose, from what it learned in its dataset, the most appropriate word to use. Syntax ultimately determines where each word should be placed and how its position in the sentence influences the final idea. Of course, this varies with the language the model was trained on. For instance, models responding in English follow different syntactic rules from models responding in German or Spanish.
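
To make the role of syntax more concrete, here is a minimal sketch of a toy grammar. The lexicon, the category names, and the single sentence pattern are all assumptions made up for illustration; real NLP models learn far richer structure from data. The sketch only shows two ideas from the text: word order decides whether a sentence is well formed, and a small, finite vocabulary already yields many sentences.

```python
from itertools import product

# Hypothetical toy lexicon: each word is assigned one syntactic category.
LEXICON = {
    "the": "DET", "a": "DET",
    "dog": "NOUN", "cat": "NOUN", "child": "NOUN",
    "sees": "VERB", "chases": "VERB",
}

# One allowed English-like sentence pattern (an assumption for this sketch).
PATTERN = ("DET", "NOUN", "VERB", "DET", "NOUN")


def categories(sentence):
    """Map each word to its category; unknown words become 'UNK'."""
    return tuple(LEXICON.get(word, "UNK") for word in sentence.lower().split())


def is_well_formed(sentence):
    """Return True when the word order matches the allowed pattern."""
    return categories(sentence) == PATTERN


def generate_all():
    """Build every sentence the pattern allows from the finite lexicon."""
    slots = [[w for w, c in LEXICON.items() if c == cat] for cat in PATTERN]
    return [" ".join(words) for words in product(*slots)]


print(is_well_formed("The dog chases a cat"))  # True
print(is_well_formed("Dog the a chases cat"))  # False
print(len(generate_all()))                     # 72
```

Seven words and one pattern already produce 72 distinct sentences, and a different language would need a different pattern, which mirrors the point that syntax depends on the language being modeled.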

No less important is semantics. Semantics looks at the content of a given sentence and, based on logical rules, works out its meaning. This area humanizes chatbots, giving them the ability to grasp the nuances of human-like speech and to follow conversations.

Beyond syntax and semantics, pragmatics plays a crucial role in NLP. Pragmatics focuses on how language is used in context, considering factors such as social environment, speaker intentions, and cultural nuances. It helps to understand the implied meanings behind words and sentences, even when they are not explicitly stated.

For example, consider the phrase “It’s cold in here.” This statement can have multiple meanings depending on the context. If said in a room with a thermostat, it might be a request to turn up the heat. However, if said during a conversation about winter weather, it could simply be a statement of fact. Pragmatic understanding enables NLP models to interpret such nuances and respond appropriately.
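
As a rough illustration of this example, the sketch below hard-codes the two readings of the phrase. The `Context` class, its fields, and the returned labels are hypothetical names invented here; an actual NLP model would infer context from data rather than from hand-written rules.

```python
from dataclasses import dataclass


@dataclass
class Context:
    """Hypothetical summary of the situation in which something is said."""
    topic: str              # what the conversation is about
    listener_can_act: bool  # e.g. the listener is standing next to a thermostat


def interpret(utterance, context):
    """Return a coarse intent label for the utterance in this context."""
    text = utterance.lower()
    if "cold in here" in text:
        # Same words, different intent depending on the situation.
        if context.listener_can_act and context.topic != "weather":
            return "request: turn up the heat"
        return "statement of fact"
    return "unknown"


phrase = "It's cold in here."
print(interpret(phrase, Context(topic="room comfort", listener_can_act=True)))
print(interpret(phrase, Context(topic="weather", listener_can_act=False)))
```

The first call prints the request reading and the second prints the statement-of-fact reading, even though the input words are identical.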

As NLP continues to evolve, researchers are exploring ways to further incorporate pragmatic understanding into models. This involves developing techniques to analyze social context, identify speaker intentions, and account for cultural differences. By doing so, NLP models can become even more adept at understanding and generating human-like language, leading to a wide range of applications, from customer service chatbots to language translation tools.

Speech Recognition and NLP Applications

Speech recognition, another application of NLP, converts spoken language into text. It draws on phonetics, the study of the sounds humans produce when speaking a language. By analyzing the acoustic features of speech, speech recognition models can identify individual phonemes (basic units of sound) and map them to the corresponding letters and words, and then to their meaning in context. This way, the model can interact and respond based on sound.
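
The mapping from phonemes to words can be sketched as a dictionary lookup. The example below assumes the acoustic analysis has already produced a sequence of phoneme symbols; the pronunciation dictionary is a hand-written, illustrative one using ARPAbet-style symbols, and the greedy longest-match strategy is a simplification chosen for brevity, not how production recognizers decode speech.

```python
# Hypothetical pronunciation dictionary with ARPAbet-style phoneme symbols.
PRONUNCIATIONS = {
    ("IH", "T", "S"): "it's",
    ("K", "OW", "L", "D"): "cold",
    ("IH", "N"): "in",
    ("HH", "IY", "R"): "here",
}

# Longest pronunciation in the dictionary, used to bound the search window.
MAX_LEN = max(len(p) for p in PRONUNCIATIONS)


def decode(phonemes):
    """Greedily match the longest known phoneme sequence at each position."""
    words, i = [], 0
    while i < len(phonemes):
        for size in range(min(MAX_LEN, len(phonemes) - i), 0, -1):
            chunk = tuple(phonemes[i:i + size])
            if chunk in PRONUNCIATIONS:
                words.append(PRONUNCIATIONS[chunk])
                i += size
                break
        else:
            # No known word starts here: emit a placeholder and move on.
            words.append("<unk>")
            i += 1
    return " ".join(words)


stream = ["IH", "T", "S", "K", "OW", "L", "D", "IH", "N", "HH", "IY", "R"]
print(decode(stream))  # it's cold in here
```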

Training NLP Models

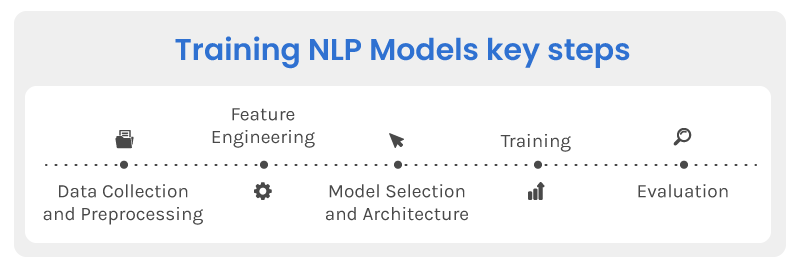

Training NLP models involves exposing them to vast amounts of text data and teaching them to understand the underlying patterns and structures of language. This process typically involves several key steps:

- Data Collection and Preprocessing: Gathering a large text corpus, such as books, articles, or transcripts, is essential. This data is then cleaned, tokenized into individual words or sentences, and normalized to ensure consistency.

- Feature Engineering: Extracting relevant features from the text data is crucial for training the model. These features can include word embeddings, n-grams, or syntactic information.

- Model Selection and Architecture: Choosing an appropriate NLP model architecture, such as recurrent neural networks (RNNs) or transformer models, is essential. These models are designed to process sequential data like text.

- Training: The model is trained on the preprocessed data, typically using supervised learning techniques. This involves feeding the model input data and corresponding target outputs (e.g., correct translations or classifications) and adjusting the model’s parameters to minimize the error between predicted and actual outputs.

- Evaluation: The trained model is evaluated on a separate validation dataset to assess its performance. Metrics like accuracy, precision, recall, and F1-score are commonly used to measure the model’s effectiveness.
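
The steps above can be sketched end to end on a toy scale. Everything in this example is an assumption made for illustration: the tiny hand-written datasets, the function names, and the choice of a perceptron (a very simple linear classifier) in place of the RNN or transformer architectures mentioned above. It is meant to show the shape of the pipeline, not a realistic training setup.

```python
import re
from collections import Counter


def preprocess(text):
    """Clean, normalize (lowercase) and tokenize a text into words."""
    return re.findall(r"[a-z']+", text.lower())


def extract_features(tokens):
    """Count unigrams and bigrams (n-grams with n = 1 and n = 2)."""
    bigrams = [" ".join(pair) for pair in zip(tokens, tokens[1:])]
    return Counter(tokens + bigrams)


def predict(weights, features):
    """Linear model: label 1 if the weighted feature sum is positive."""
    score = sum(weights.get(name, 0.0) * count for name, count in features.items())
    return 1 if score > 0 else 0


def train(examples, epochs=10):
    """Perceptron training: adjust weights whenever a prediction is wrong."""
    weights = {}
    for _ in range(epochs):
        for text, label in examples:
            features = extract_features(preprocess(text))
            error = label - predict(weights, features)  # -1, 0 or 1
            if error:
                for name, count in features.items():
                    weights[name] = weights.get(name, 0.0) + error * count
    return weights


def evaluate(weights, examples):
    """Compute accuracy, precision, recall and F1 for the positive class."""
    tp = fp = fn = tn = 0
    for text, label in examples:
        guess = predict(weights, extract_features(preprocess(text)))
        if guess == 1 and label == 1:
            tp += 1
        elif guess == 1 and label == 0:
            fp += 1
        elif guess == 0 and label == 1:
            fn += 1
        else:
            tn += 1
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = (tp + tn) / len(examples)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical labeled data: 1 = positive sentiment, 0 = negative sentiment.
TRAIN_SET = [
    ("I love this film", 1),
    ("What a great story", 1),
    ("I hate this film", 0),
    ("What a boring story", 0),
]
VALIDATION_SET = [
    ("I love this great story", 1),
    ("This film is great", 1),
    ("A boring film", 0),
    ("I hate this boring story", 0),
]

model = train(TRAIN_SET)
print(evaluate(model, VALIDATION_SET))
```

Keeping the validation sentences separate from the training sentences matters even at this scale: the metrics then describe how the model handles text it has not seen, which is the point of the evaluation step.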

Linguistics plays a crucial role in guiding the development of NLP models. Concepts from linguistics, such as syntax, semantics, and pragmatics, help inform the design of features, model architectures, and training techniques. For example, understanding syntactic structures can aid in building models that can parse sentences correctly, while semantics can help models grasp the meaning of words and phrases.

NLP models have a wide range of applications, including:

- Machine Translation: Translating text from one language to another.

- Sentiment Analysis: Determining the sentiment expressed in text (e.g., positive, negative, neutral).

- Text Summarization: Generating concise summaries of longer texts.

- Question Answering: Answering questions posed in natural language.

- Chatbots and Virtual Assistants: Creating conversational agents that can interact with users.

These applications are having a significant impact on various industries, from customer service and healthcare to education and entertainment. The development of NLP requires a strong collaboration between linguistics and computer science. Linguists provide insights into the structure and meaning of human language, while computer scientists develop the algorithms and techniques necessary to process and understand language. The ongoing collaboration between these two fields is essential for advancing the state of the art in NLP.