A world where computers can understand the environment around them simply by looking. This isn’t science fiction anymore. It’s the new frontier of artificial intelligence called Computer Vision. It can perceive its surroundings and interpret visual information to identify objects, colors, shapes, and areas. This technology consists of a model that learns information from multidimensional data, such as images or videos, enabling machines to develop a visual perception like humans. Today, Computer Vision systems can analyze vast amounts of visual data with incredible accuracy. But how exactly was Computer Vision developed? How does it work? And what are the potential benefits for businesses?

Introducing Computer Vision

The first steps in Computer Vision date back to 1959, when researchers David Hubel and Torsten Wiesel studied pattern recognition and image processing with the article “Receptive fields of single neurons in the cat’s striate cortex”. However, it is known that there is no single developer of the Computer Vision concept, it is a multidisciplinary field that has evolved with contributions from several researchers and institutions worldwide.

However, certain fundamental features make Computer Vision what it is. The Hough Transform, for example, is an algorithm proposed by Paul Hough in 1962 used for detecting lines and geometric shapes in an image. In 1982, David Marr, a British neuroscientist, published another influential paper — “Vision: A Computational Investigation into the Human Representation and Processing of Visual Information” which consolidated the idea that vision processing doesn’t start with separate object identification, but with 3D representations of the environment to allow interactions. These researches paved the way to deeply comprehend how visual processing works and how to replicate it artificially.

Around 1999, most researchers stopped trying to reconstruct objects by creating 3D models of them (the path proposed by Marr). That’s when David Lowe’s work “Object Recognition from Local Scale-Invariant Features” stated that visual recognition systems use features invariant to rotation, location, and, partially, changes in illumination. According to Lowe, these features are somewhat similar to the properties of neurons found in the inferior temporal cortex that are involved in object detection processes in primate vision. However, the main catalyst for Computer Vision development is, without a doubt, R&D funding from Big Techs such as IBM, Microsoft, Google, and Amazon.

Despite understanding how it began, technological improvements allowed Computer Vision to be refined and reach the state-of-the-art of today’s AI. According to IBM, the current capacities of Computer Vision allow computers to derive meaningful information from digital images, videos, and other visual inputs. Furthermore, this technology functions through high-definition cameras that capture images from the surrounding environment. Then, the algorithm is trained with specific datasets, aiming to enhance the model’s autonomous object identification capabilities.

How can Artificial Intelligence see things?

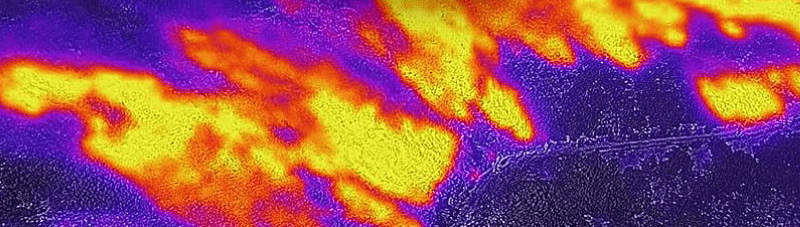

To autonomously identify objects and patterns, cameras alone won’t do the job. Computer Vision requires appropriate image processing and continuous model training. Besides that, an adequate processor is needed to support an I/O (Input / Output) for communicating the information acquired from the captured images. In these conditions, AI can analyze data with amplified sensitivity to magnetic rays, achieving levels of infrared, ultraviolet, and X-rays, enabling the system to learn complex patterns and trends that humans can’t. And, despite acknowledging Computer Vision’s main requirements, the specific steps of how AI dissects images might still not be clear.

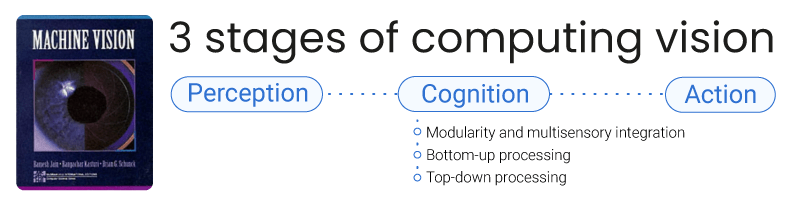

In this regard, Ramesh Jain presented in 1995 in the book ‘’Machine Vision’’ that Artificial Intelligence could be viewed in the same way as human cognition, having three stages in the Computer Vision process: perception, cognition, and action. Indeed, according to the article ”Advancing Perception in Artificial Intelligence through Principles of Cognitive Science”, the concept is broader, including the following steps:

1) Sensory stimulation (Perception): The first step in the perception process is to take in a sensory input signal, which contains different forms of signals (such as visual and auditory).

2) Organization (Cognition): The sensory inputs are then processed in various stages in specialized perceptual pathways

a) Modularity and multisensory integration: Features are hierarchically processed in different modules or areas of the computer/brain, giving rise to highly specialized functionality, all of which are integrated to form a common concept. Different modalities, such as vision, audition, and touch consist of complementary information and are integrated at various stages, hence improving the robustness of perception.

b) Bottom-up processing: The sensory input is passed through various stages of processing in a bottom-up fashion, where specific information (attributes) are extracted and combined to form complex concepts, relations, and patterns.

c) Top-down processing: The perceptual pathway is not a fixed collection of processes but is dynamically guided by other high-level cognitive processes, such as attention and memory, in a top-down fashion of processing information.

3) Interpretation (Action): Processed sensory information is then combined with prior knowledge and cues through prediction and inference to give more informed results.

The interconnectedness of these three steps showcases how the Computer Vision process was made to mirror the cognition of a human brain. Furthermore, the system perceives visual data, cognitively processes and interprets this data, and then decides on an action based on the interpretation. More specifically, Computer Vision processes images as information through visual refinement algorithms, where filters are used to enable the recognition of pre-established patterns and features in the images.

In this context, filters are programmed to extract the most relevant information for a specific task. This means that the system can precisely extract information about the object’s shape, color, and area, facilitating the identification of the established rule. Finally, through the essence of any AI, the system learns from experience, constantly adjusting and improving its behavior. Thus, it is clear that Computer Vision represents a game-changer to businesses by providing a digitized alternative to observative work, but what are the benefits of its application?

The benefits of Computer Vision

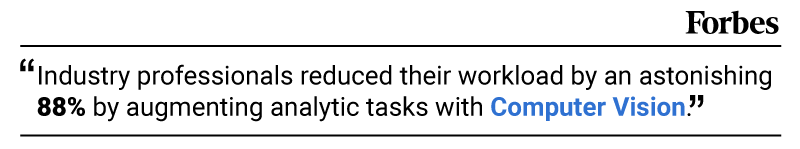

The most significant advantage of Computer Vision is its ability to streamline what used to be time-consuming, expensive, and exhausting tasks. In this regard, by extracting valuable patterns from visual data, Computer Vision can significantly reduce the workload required for specific tasks. According to Forbes, industry professionals reduced their workload by an astonishing 88% by augmenting analytic tasks with Computer Vision. Thus, how can industries benefit from Computer Vision?

Automation and efficiency

Observation-related activities can often be repetitive and exhausting, leading human inspectors to miss subtle defects or inconsistencies due to fatigue. Computer Vision, on the other hand, analyzes images based on algorithms trained through extensive datasets, leading to higher speed and consistency, automating and increasing efficiency in complex tasks. According to a TechJournal survey, 45% of businesses see opportunities for cost-cutting and efficiency gains with Computer Vision adoption.

Enhanced recognition and accuracy

Besides that, Computer Vision systems can analyze vast amounts of visual data with high accuracy, reducing errors and improving overall process reliability. These capabilities can help increase safety and process control by detecting early minimal errors that could lead to hazards. The article ‘’Computer Vision and Machine Learning-Based Predictive Analysis for Urban Agricultural Systems’’ reported that Computer Vision in agriculture reaches a Mean Absolute Percentage Error (MAPE) within the range of 10–12% in forecasting air humidity and soil moisture from thermal images. By leveraging this technology, farmers can gain valuable insights into critical environmental factors, allowing them to optimize actions such as irrigation and growing conditions.

For instance, Computer Vision can help address Personal Protective Equipment (PPE) detection by ensuring workers adhere to safety regulations and wear the necessary gear. The algorithm can be trained to recognize various types of PPE, such as helmets, gloves, masks, and safety glasses. Thus, these systems can instantly alert supervisors or halt operations if any worker is found without the required equipment. The article ‘’Personal Protective Equipment Detection: A Deep-Learning-Based Sustainable Approach’’ stated that Computer Vision detected whether workers were wearing helmets correctly with an accuracy of over 90%.

source: https://geoagri.com.br/public/storage/blog/imagem6101d6f7c2f3e.jpeg

Continual Learning

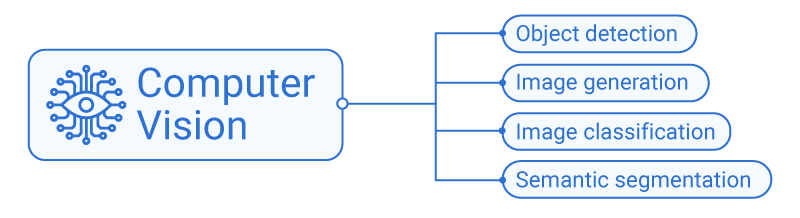

However, Computer Vision’s potential lies not just in its impressive abilities, but also in its inherent potential for further improvement. This potential lies in the core of any AI, Machine Learning, in which the capacity for continuous improvement is referred to as Continual Learning. Indeed, this autonomous learning methodology is present in all the Computer Vision steps, allowing algorithms to adapt and improve as they encounter new information, without forgetting what they already know. According to the article ‘’Recent Advances of Continual Learning in Computer Vision: An Overview’’, recently, a large number of continual learning methods have been adopted for various Computer Vision tasks, such as image classification, object detection, semantic segmentation, and image generation.

Vidya’s Approach to Computer Vision: detecting and classifying integrity anomalies, autonomously

According to Amazon, AI is changing the way performance management is conducted using neural networks so it can be applied in different fields. Neural networks applied to images and videos use inputs to be trained on, filtering useful information into their structure that will be transformed into outputs at the end of their analysis. With these trained neural networks, companies can perform object recognition, monitoring, classification, image reconstruction, creating new images, and facial recognition, among other things.

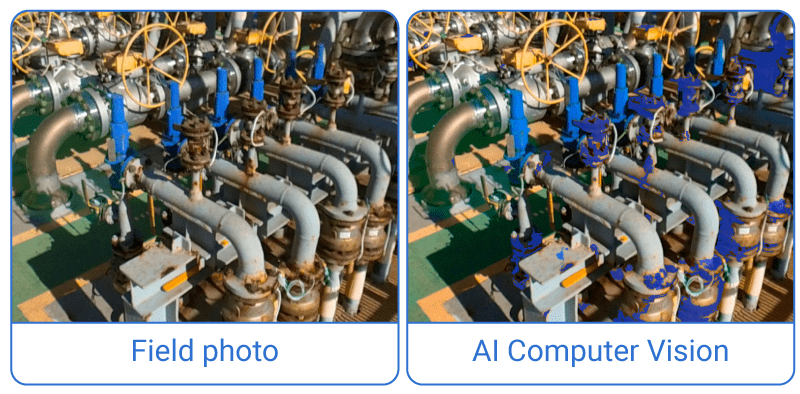

Considering the available capabilities, Computer Vision emerges as a game-changer solution to industrial inspections. By leveraging Reality Capture and image analysis, Computer Vision systems can continuously monitor equipment health, and detect subtle anomalies exceeding normal limits, corrosion, cracks on surfaces, or misalignments in moving parts. And, given the need for more efficient and reliable reporting techniques in the energy industry, Vidya Technology presents a deep tech solution that offers the best of AI’s Computer Vision for anomaly identification within complex industrial environments.

The Vidya System Platform offers an automated inspection process supported by Computer Vision and customizable dashboards, reports, prediction models, 3D models, and simulation capabilities for the identification of anomalies that may go unnoticed by conventional inspection methods. Beyond that, using Computer Vision models makes constant monitoring of the object’s conditions possible, allowing operators to determine the anomaly’s scalability and overall impact accurately. This technology has been reducing costs and unplanned downtime while allowing workers to prioritize what matters most.

In practice, the application approach consists of contextualizing reports, documents, technical drawings, and other kinds of data in a 3D environment. Then, with the image and sensorial inputs (Reality Capture), the AI Computer Vision processes the images to autonomously identify and classify visual anomalies, such as pitting, potential discontinuities, welding, and corrosion in a 3D model. Therefore, using the information to generate work order inputs and support for maintenance plans.

What does the future say about Computer Vision?

Within this scenario, what makes Computer Vision have immense potential isn’t just its ability to identify patterns autonomously, but its ability to keep learning from previous experiences. Grand View Research reports the value of the global Computer Vision market is expected to reach $19 billion by 2027, up from just over $11 billion in 2020. This way, decision-making professionals can prioritize what they find more useful in their AI diagnosis, avoiding wastes of time or other resources and increasing accuracy and efficiency. Furthermore, it won’t be long until this technology is integrated into all processes of society, reaching a scenario in which AI technology facilitates the decision-making of every citizen.